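I installed 64-bit Raspbian (bullseye) on a Raspberry Pi 4 and tried to set up a Kubernetes environment on top of it.

However, I ran into limits while configuring several settings, so I gave up on 64-bit and reinstalled with the 32-bit image.

For details on the problems I hit, see the post below.

2022.08.02 - [Raspberry pi] - Raspberry Pi 4 Raspbian 64-bit Kubernetes installation failure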

So I start over by flashing the 32-bit Raspbian image.

The installed version is:

PRETTY_NAME="Raspbian GNU/Linux 11 (bullseye)"

NAME="Raspbian GNU/Linux"

VERSION_ID="11"

VERSION="11 (bullseye)"

VERSION_CODENAME=bullseye

ID=raspbian

ID_LIKE=debian

Next, I attempt the Kubernetes installation.

I followed the blog post below for the installation steps.

https://lance.tistory.com/5#recentComments

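That post is somewhat dated, however,

so I slightly changed only the commands that pin a specific version, in order to install the latest release.

The changes are described in the steps below.

Let's begin.

First, run the following commands in order.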

$ sudo swapoff -a

$ sudo systemctl disable dphys-swapfile.service

$ sudo apt update
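By default the kubelet refuses to start while swap is active, which is why swap is turned off first. As a minimal sketch for confirming that it really is off, the helper below counts the entries in a `/proc/swaps`-style file. The function name `swap_count` is my own and not part of any tool.

```shell
# swap_count [FILE]: print the number of active swap areas listed in a
# /proc/swaps-style file (hypothetical helper; defaults to /proc/swaps).
swap_count() {
    # The first line is the column header; each further non-empty line is one swap area.
    awk 'NR > 1 && NF > 0 { n++ } END { print n + 0 }' "${1:-/proc/swaps}"
}
```

Running `swap_count` after the commands above should print `0`. If it still prints a non-zero number after a reboot, the dphys-swapfile service is probably still enabled.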

Install Docker.

$ sudo apt install -y docker.io

After installing Docker, check the version.

$ sudo docker info

Client:

Context: default

Debug Mode: false

Server:

Containers: 0

Running: 0

Paused: 0

Stopped: 0

Images: 0

Server Version: 20.10.5+dfsg1

Storage Driver: overlay2

Backing Filesystem: extfs

Supports d_type: true

Native Overlay Diff: true

Logging Driver: json-file

Cgroup Driver: systemd

Cgroup Version: 2

Plugins:

Volume: local

Network: bridge host ipvlan macvlan null overlay

Log: awslogs fluentd gcplogs gelf journald json-file local logentries splunk syslog

Swarm: inactive

Runtimes: runc io.containerd.runc.v2 io.containerd.runtime.v1.linux

Default Runtime: runc

Init Binary: docker-init

containerd version: 1.4.13~ds1-1~deb11u2

runc version: 1.0.0~rc93+ds1-5+deb11u2

init version:

Security Options:

seccomp

Profile: default

cgroupns

Kernel Version: 5.15.32-v7l+

Operating System: Raspbian GNU/Linux 11 (bullseye)

OSType: linux

Architecture: armv7l

CPUs: 4

Total Memory: 3.749GiB

Name: raspberrypi4

ID: 7HVW:45GB:VTVT:BLWI:ZGDU:Y27C:OYH7:PBML:B2QI:Q754:PXPA:KWKX

Docker Root Dir: /var/lib/docker

Debug Mode: false

Registry: https://index.docker.io/v1/

Labels:

Experimental: false

Insecure Registries:

127.0.0.0/8

Live Restore Enabled: false

WARNING: No memory limit support

WARNING: No swap limit support

WARNING: Support for cgroup v2 is experimental

Looks like it installed fine.

Now apply some additional settings.

From here on, work as root.

$ sudo su

$ cat > /etc/docker/daemon.json <<EOF

{

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2"

}

EOF

Everything from cat through EOF is one multi-line command.

$ sed -i '$ s/$/ cgroup_enable=cpuset cgroup_enable=memory cgroup_memory=1 swapaccount=1/' /boot/cmdline.txt

The following is also a multi-line command, from cat through EOF.

$ cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

$ sysctl --system

I simply followed these steps mechanically.
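One thing worth checking here: the `net.bridge.*` keys only exist under `/proc/sys` once the `br_netfilter` kernel module is loaded, so `sysctl --system` can silently skip them. The sketch below lists keys from a sysctl.d-style file that have no matching entry. `missing_sysctl_keys` is a hypothetical helper name, and the key-to-path mapping (dots become slashes) is the usual convention rather than anything specific to this setup.

```shell
# missing_sysctl_keys CONF [PROC_SYS_ROOT]
# Print every key in CONF that has no matching file under PROC_SYS_ROOT
# (default /proc/sys). Hypothetical helper for illustration.
missing_sysctl_keys() {
    conf=$1
    root=${2:-/proc/sys}
    # Drop comments and blank lines, keep only the key to the left of '='.
    sed -e 's/#.*//' -e '/^[[:space:]]*$/d' \
        -e 's/[[:space:]]*=.*//' -e 's/^[[:space:]]*//' "$conf" |
    while IFS= read -r key; do
        # sysctl key a.b.c corresponds to the path a/b/c
        path=$root/$(printf '%s' "$key" | tr . /)
        [ -e "$path" ] || printf '%s\n' "$key"
    done
}
```

If `missing_sysctl_keys /etc/sysctl.d/k8s.conf` prints anything, loading the module with `sudo modprobe br_netfilter` and re-running `sudo sysctl --system` should make the keys appear.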

That was all environment setup. Now for the actual Kubernetes installation.

$ curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

$ cat <<EOF | sudo tee /etc/apt/sources.list.d/kubernetes.list

deb https://apt.kubernetes.io/ kubernetes-xenial main

EOF

$ apt update && sudo apt install -y kubelet kubeadm kubectl

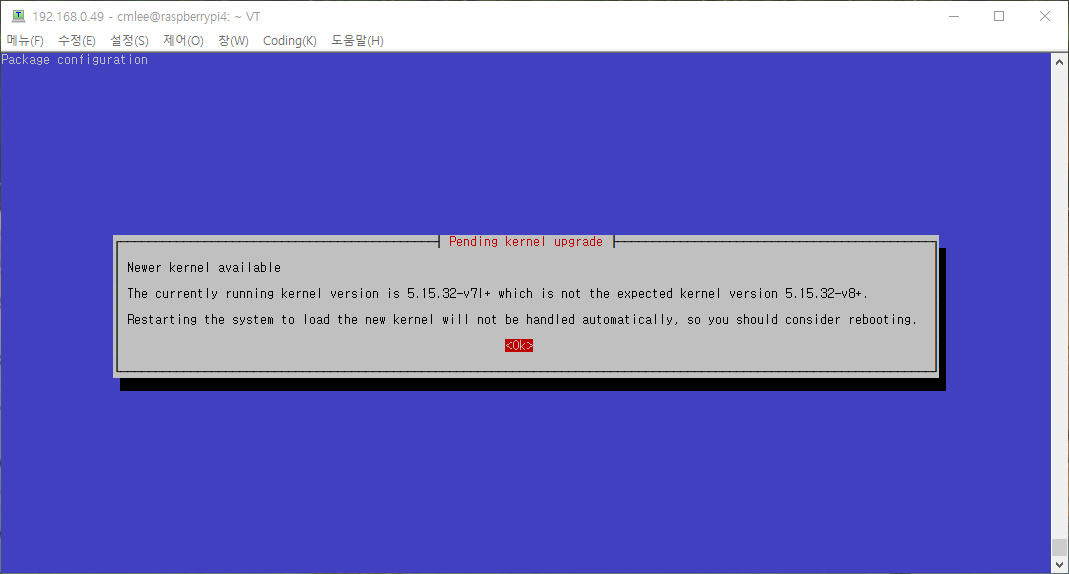

Near the end of the installation, it asks for a reboot.

So I reboot first...

$ reboot now

From here on, work as a regular user again.

$ sudo apt-mark hold kubelet kubeadm kubectl

With Kubernetes installed and the machine rebooted, it is time to set up the cluster.

$ TOKEN=$(sudo kubeadm token generate)

$ echo $TOKEN

$ sudo kubeadm init --token=${TOKEN} --pod-network-cidr=10.244.0.0/16
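A kubeadm bootstrap token has the form `[a-z0-9]{6}.[a-z0-9]{16}`: a 6-character ID, a dot, then a 16-character secret. As a small sanity check before passing `$TOKEN` to `kubeadm init`, something like the following could be used. `is_bootstrap_token` is a name I made up for this sketch.

```shell
# is_bootstrap_token TOKEN: succeed if TOKEN matches the kubeadm bootstrap
# token format (6 lowercase alphanumerics, a dot, 16 lowercase alphanumerics).
# Hypothetical helper for illustration.
is_bootstrap_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}
```

For example, `is_bootstrap_token "$TOKEN" || echo "unexpected token format"` would flag an empty or mangled token before the init step runs.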

The join command was printed at the end as expected.

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.0.49:6443 --token xxxxxxxxxxxxxxxxxxxxxxxxx \

--discovery-token-ca-cert-hash sha256:xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

Set up the configuration for the regular user as well.

$ mkdir -p $HOME/.kube

$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

Reload the systemd configuration.

$ sudo systemctl daemon-reload

Now comes the part that failed on 64-bit.

$ kubectl get nodes

The connection to the server 192.168.0.49:6443 was refused - did you specify the right host or port?

Uh-oh.

Why doesn't it work?

Let's check with ps.

$ ps -ef |grep apiserver

cmlee 2494 875 0 14:14 pts/0 00:00:00 grep --color=auto apiserver

The apiserver still isn't running.

Time to look at the logs.

The logs are located here:

cmlee@raspberrypi4:/var/log/pods $ ls

kube-system_etcd-raspberrypi4_7211ed9f4bce9bff2f0a4446dc25ed48

kube-system_kube-apiserver-raspberrypi4_e904fb4c74434889bc8a97be67ec30db

kube-system_kube-controller-manager-raspberrypi4_bdc632ae97ddfb73d73ffca4cf0bc988

kube-system_kube-scheduler-raspberrypi4_d6016456cb9ad5d9fe4e404f5cf09230
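Each of these directories follows the layout `<namespace>_<pod>_<uid>/<container>/<restart-count>.log`. Since the control-plane containers keep restarting here, the newest log file is usually the interesting one. A minimal sketch for locating it, assuming that layout (`latest_pod_log` is a hypothetical helper name):

```shell
# latest_pod_log NAME_FRAGMENT [LOG_ROOT]
# Print the most recently modified container log whose pod directory name
# contains NAME_FRAGMENT. LOG_ROOT defaults to /var/log/pods.
# Hypothetical helper; assumes paths contain no newlines.
latest_pod_log() {
    root=${2:-/var/log/pods}
    # ls -t sorts newest first; keep only the first entry.
    ls -t "$root"/*"$1"*/*/*.log 2>/dev/null | head -n 1
}
```

Something like `sudo tail -n 20 "$(latest_pod_log etcd)"` would then show the tail of the current etcd log.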

First, the apiserver log:

2022-08-02T14:15:47.412538047+01:00 stderr F W0802 13:15:47.412268 1 clientconn.go:1331] [core] grpc: addrConn.createTransport failed to connect to {127.0.0.1:2379 127.0.0.1 <nil> 0 <nil>}. Err: connection error: desc = "transport: Error while dialing dial tcp 127.0.0.1:2379: connect: connection refused". Reconnecting...

It can't reach etcd.

Next, the etcd log:

2022-08-02T14:16:01.040280978+01:00 stderr F {"level":"info","ts":"2022-08-02T13:16:01.040Z","caller":"etcdmain/main.go:50","msg":"successfully notified init daemon"}

2022-08-02T14:16:01.041322298+01:00 stderr F {"level":"info","ts":"2022-08-02T13:16:01.041Z","caller":"embed/serve.go:188","msg":"serving client traffic securely","address":"192.168.0.49:2379"}

2022-08-02T14:16:01.043033237+01:00 stderr F {"level":"info","ts":"2022-08-02T13:16:01.042Z","caller":"embed/serve.go:188","msg":"serving client traffic securely","address":"127.0.0.1:2379"}

There is no obvious error here.

.

.

.

So maybe it is still starting up?

I wait a little and run ps again.

$ ps -ef |grep etcd

root 2865 2655 9 14:15 ? 00:00:06 etcd --advertise-client-urls=https://192.168.0.49:2379 --cert-file=/etc/kubernetes/pki/etcd/server.crt --client-cert-auth=true --data-dir=/var/lib/etcd --experimental-initial-corrupt-check=true --initial-advertise-peer-urls=https://192.168.0.49:2380 --initial-cluster=raspberrypi4=https://192.168.0.49:2380 --key-file=/etc/kubernetes/pki/etcd/server.key --listen-client-urls=https://127.0.0.1:2379,https://192.168.0.49:2379 --listen-metrics-urls=http://127.0.0.1:2381 --listen-peer-urls=https://192.168.0.49:2380 --name=raspberrypi4 --peer-cert-file=/etc/kubernetes/pki/etcd/peer.crt --peer-client-cert-auth=true --peer-key-file=/etc/kubernetes/pki/etcd/peer.key --peer-trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt --snapshot-count=10000 --trusted-ca-file=/etc/kubernetes/pki/etcd/ca.crt

root 2923 2796 58 14:16 ? 00:00:27 kube-apiserver --advertise-address=192.168.0.49 --allow-privileged=true --authorization-mode=Node,RBAC --client-ca-file=/etc/kubernetes/pki/ca.crt --enable-admission-plugins=NodeRestriction --enable-bootstrap-token-auth=true --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key --etcd-servers=https://127.0.0.1:2379 --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key --requestheader-allowed-names=front-proxy-client --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt --requestheader-extra-headers-prefix=X-Remote-Extra- --requestheader-group-headers=X-Remote-Group --requestheader-username-headers=X-Remote-User --secure-port=6443 --service-account-issuer=https://kubernetes.default.svc.cluster.local --service-account-key-file=/etc/kubernetes/pki/sa.pub --service-account-signing-key-file=/etc/kubernetes/pki/sa.key --service-cluster-ip-range=10.96.0.0/12 --tls-cert-file=/etc/kubernetes/pki/apiserver.crt --tls-private-key-file=/etc/kubernetes/pki/apiserver.key

cmlee 3092 875 0 14:17 pts/0 00:00:00 grep --color=auto etcd

It's up.

So, once more:

$ kubectl get node

NAME STATUS ROLES AGE VERSION

raspberrypi4 NotReady control-plane 4m17s v1.24.3

It finally came up.

I was on the verge of getting annoyed, worried it would fail the way it did on 64-bit, so this is a relief.
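On a Raspberry Pi the control plane can take a minute or more to come up, so instead of re-running ps by hand, the waiting can be scripted. This is a generic retry sketch and not something from the original guide. `wait_for` is a made-up name, and the try count and delay are arbitrary.

```shell
# wait_for TRIES DELAY CMD...: run CMD until it succeeds, at most TRIES times,
# sleeping DELAY seconds after each failed attempt. Returns 0 on success and
# 1 if every attempt failed. Hypothetical helper for illustration.
wait_for() {
    tries=$1
    delay=$2
    shift 2
    i=1
    while [ "$i" -le "$tries" ]; do
        if "$@" >/dev/null 2>&1; then
            return 0
        fi
        i=$((i + 1))
        sleep "$delay"
    done
    return 1
}
```

For instance, `wait_for 30 10 kubectl get nodes && kubectl get nodes` polls the API server for up to about five minutes and prints the node list once it responds.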

Now, to resolve the NotReady status, install a network plugin.

$ kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

The connection to the server 192.168.0.49:6443 was refused - did you specify the right host or port?

Huh?

Ugh.

Running ps again shows that etcd and the apiserver are gone.

Checking the logs again, etcd shows the following:

pkg/osutil: received terminated signal, shutting down

Something is killing etcd.

It happens periodically.

Back to endless googling.

Then I found a clue.

https://discuss.kubernetes.io/t/why-does-etcd-fail-with-debian-bullseye-kernel/19696

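The Raspbian version I am installing is bullseye.

In short, the thread says that

etcd works fine on Debian buster but keeps dying on bullseye,

which matches my symptoms exactly.

According to the thread, the cause is that cgroup v2 is in use, and the fix is to enable cgroup v1.

Let's check.

First, confirm whether cgroup v2 is currently in use.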

$ sudo docker info

.

.

.

Cgroup Driver: systemd

Cgroup Version: 2

.

.

.

$ findmnt -lo source,target,fstype,options -t cgroup,cgroup2

SOURCE TARGET FSTYPE OPTIONS

cgroup2 /sys/fs/cgroup/unified cgroup2 rw,nosuid,nodev,noexec,relatime,nsdelegate

It looks that way.
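Another common way to tell the two apart is the filesystem type of `/sys/fs/cgroup`: `stat -fc %T /sys/fs/cgroup` reports `cgroup2fs` on cgroup v2 and `tmpfs` on cgroup v1. A minimal sketch that maps that output to a version number (`cgroup_version_of` is a hypothetical helper name):

```shell
# cgroup_version_of FSTYPE: map the filesystem type reported by
# `stat -fc %T /sys/fs/cgroup` to a cgroup version.
# Hypothetical helper for illustration.
cgroup_version_of() {
    case "$1" in
        cgroup2fs) echo 2 ;;        # unified hierarchy (cgroup v2)
        tmpfs)     echo 1 ;;        # legacy or hybrid hierarchy (cgroup v1)
        *)         echo unknown ;;
    esac
}
```

Usage would be `cgroup_version_of "$(stat -fc %T /sys/fs/cgroup)"`, which should agree with the `Cgroup Version` line in `docker info`.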

So, enable cgroup v1.

$ sudo vi /boot/cmdline.txt

.............................. cgroup_enable=cpuset cgroup_enable=memory cgroup_memory=1 swapaccount=1 systemd.unified_cgroup_hierarchy=0

Append systemd.unified_cgroup_hierarchy=0 at the very end of the line.
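`/boot/cmdline.txt` must stay a single line, and adding the same parameter twice is easy to do when editing by hand. As an alternative to vi, here is a sketch of an idempotent append. `ensure_kernel_param` is a name I made up, it relies on GNU `sed -i`, and it assumes the parameter contains no `|` or `&` characters.

```shell
# ensure_kernel_param FILE PARAM: append PARAM to the end of the last line of
# FILE unless it is already present as a whole word.
# Hypothetical helper; requires GNU sed for -i.
ensure_kernel_param() {
    file=$1
    param=$2
    # Split the line into one word per line and look for an exact match.
    if tr -s ' \t' '\n\n' < "$file" | grep -Fxq -- "$param"; then
        return 0
    fi
    # '$ s|$| PARAM|' appends to the end of the last line, keeping one line.
    sed -i "\$ s|\$| $param|" "$file"
}
```

Run as root, `ensure_kernel_param /boot/cmdline.txt systemd.unified_cgroup_hierarchy=0` would have the same effect as the manual edit, and running it a second time changes nothing. Taking a backup copy of the file first is a sensible precaution.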

Then reboot and check again.

$ sudo docker info

.

.

.

Cgroup Driver: systemd

Cgroup Version: 1

.

.

.

$ findmnt -lo source,target,fstype,options -t cgroup,cgroup2

SOURCE TARGET FSTYPE OPTIONS

cgroup2 /sys/fs/cgroup/unified cgroup2 rw,nosuid,nodev,noexec,relatime,nsdelegate

cgroup /sys/fs/cgroup/systemd cgroup rw,nosuid,nodev,noexec,relatime,xattr,name=systemd

cgroup /sys/fs/cgroup/cpu,cpuacct cgroup rw,nosuid,nodev,noexec,relatime,cpu,cpuacct

cgroup /sys/fs/cgroup/cpuset cgroup rw,nosuid,nodev,noexec,relatime,cpuset

cgroup /sys/fs/cgroup/freezer cgroup rw,nosuid,nodev,noexec,relatime,freezer

cgroup /sys/fs/cgroup/net_cls,net_prio cgroup rw,nosuid,nodev,noexec,relatime,net_cls,net_prio

cgroup /sys/fs/cgroup/memory cgroup rw,nosuid,nodev,noexec,relatime,memory

cgroup /sys/fs/cgroup/pids cgroup rw,nosuid,nodev,noexec,relatime,pids

cgroup /sys/fs/cgroup/blkio cgroup rw,nosuid,nodev,noexec,relatime,blkio

cgroup /sys/fs/cgroup/devices cgroup rw,nosuid,nodev,noexec,relatime,devices

cgroup /sys/fs/cgroup/perf_event cgroup rw,nosuid,nodev,noexec,relatime,perf_event

Looks like it worked.

$ kubectl get node

NAME STATUS ROLES AGE VERSION

raspberrypi4 NotReady control-plane 67m v1.24.3

Phew.

Install flannel again.

$ kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

namespace/kube-flannel created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds created

It installed without errors.

$ kubectl get node

NAME STATUS ROLES AGE VERSION

raspberrypi4 Ready control-plane 69m v1.24.3

At last, the Kubernetes cluster is set up.

$ kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-flannel kube-flannel-ds-gl92p 1/1 Running 0 55s

kube-system coredns-6d4b75cb6d-9tkmz 0/1 ContainerCreating 0 65m

kube-system coredns-6d4b75cb6d-sc9rf 0/1 ContainerCreating 0 65m

kube-system etcd-raspberrypi4 1/1 Running 10 (15m ago) 68m

kube-system kube-apiserver-raspberrypi4 1/1 Running 13 (19m ago) 68m

kube-system kube-controller-manager-raspberrypi4 1/1 Running 16 (17m ago) 64m

kube-system kube-proxy-xl5rj 1/1 Running 13 (16m ago) 65m

kube-system kube-scheduler-raspberrypi4 1/1 Running 14 (15m ago) 68m

.

.

.

However, coredns never changes to Running.

What is it this time?

$ kubectl describe pod coredns-6d4b75cb6d-9tkmz -n kube-system

Name: coredns-6d4b75cb6d-9tkmz

Namespace: kube-system

.

.

.

.

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 69m default-scheduler 0/1 nodes are available: 1 node(s) had untolerated taint {node.kubernetes.io/not-ready: }. preemption: 0/1 nodes are available: 1 Preemption is not helpful for scheduling.

Warning FailedScheduling 17m default-scheduler 0/1 nodes are available: 1 node(s) had untolerated taint {node.kubernetes.io/not-ready: }. preemption: 0/1 nodes are available: 1 Preemption is not helpful for scheduling.

Normal Scheduled 5m12s default-scheduler Successfully assigned kube-system/coredns-6d4b75cb6d-9tkmz to raspberrypi4

Warning FailedMount 5m11s kubelet MountVolume.SetUp failed for volume "config-volume" : failed to sync configmap cache: timed out waiting for the condition

Warning FailedCreatePodSandBox 5m11s kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "f83da8c7d53b70de713fae84b2ad312863b407ced38006087423a9dcf425b054": failed to find plugin "loopback" in path [/usr/lib/cni]

Warning FailedCreatePodSandBox 4m56s kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "24ddf83c06bee76de61e1014539552750cfeda2da558d81f357b86ca6c0c0b14": failed to find plugin "loopback" in path [/usr/lib/cni]

Warning FailedCreatePodSandBox 4m44s kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "33a6bfbe449bfb80e8ca94c909e2db42e17ed3886a11919bc3b92ff2fa004775": failed to find plugin "loopback" in path [/usr/lib/cni]

Warning FailedCreatePodSandBox 4m30s kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "7462cb95e9d5dc64773a6796cf2cde59ab95f5d0ccd179d70f34d8fb6915d52c": failed to find plugin "loopback" in path [/usr/lib/cni]

Warning FailedCreatePodSandBox 4m19s kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "b14631d369e7099b61df08699e7d2b1c29a0f7292ab849fba299c9c88a386edb": failed to find plugin "loopback" in path [/usr/lib/cni]

Warning FailedCreatePodSandBox 4m8s kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "5839e11eba03161ab59efee21a4f485ba7db7428435fb7eeb1572f2c7772b811": failed to find plugin "loopback" in path [/usr/lib/cni]

Warning FailedCreatePodSandBox 3m56s kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "731193c5529612cdc3ce662b0294d49b27f46444a1593f5bfd7a26df29b63ac1": failed to find plugin "loopback" in path [/usr/lib/cni]

Warning FailedCreatePodSandBox 3m44s kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "3622fd2a17c463b88134aac6dcfd785cd97f75cc72860285bf690bf37aa5d5d4": failed to find plugin "loopback" in path [/usr/lib/cni]

Warning FailedCreatePodSandBox 3m30s kubelet Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "d66e88c5a0bbf666e036f777ffc9368943a49fff25bee027891413520f9e08f0": failed to find plugin "loopback" in path [/usr/lib/cni]

Warning FailedCreatePodSandBox 3s (x16 over 3m16s) kubelet (combined from similar events): Failed to create pod sandbox: rpc error: code = Unknown desc = failed to setup network for sandbox "123ecb8dd9d2c4f341bc9e7fdf94d4d714fe6b6fa0672d10be2225a2d2448221": failed to find plugin "loopback" in path [/usr/lib/cni]

failed to find plugin "loopback" in path [/usr/lib/cni]

I installed flannel, so why am I getting a CNI error?
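One way to narrow this down is to check which directory actually contains the `loopback` plugin binary. The error shows the runtime searching `/usr/lib/cni`, while the `kubernetes-cni` package normally places its plugins in `/opt/cni/bin`. Whether that mismatch is the cause here is only my assumption, and `find_cni_plugin` is a hypothetical helper name.

```shell
# find_cni_plugin NAME DIR...: print every DIR that contains an executable
# CNI plugin called NAME. Hypothetical helper for illustration.
find_cni_plugin() {
    name=$1
    shift
    for dir in "$@"; do
        if [ -x "$dir/$name" ]; then
            printf '%s\n' "$dir"
        fi
    done
    return 0
}
```

Running `find_cni_plugin loopback /usr/lib/cni /opt/cni/bin` shows where the binary lives. If only `/opt/cni/bin` is printed, the runtime is looking in the wrong place.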

It feels like I am almost there, but this one will have to wait until tomorrow.
